Rate Limiting in System Design

Last Updated : 07 Nov, 2024

Rate limiting is a key idea in system design: it regulates the flow of requests or traffic through a system. It is essential for preventing overload, strengthening security, and keeping performance stable. This article explains why rate limiting matters for the stability and dependability of a system, describes the main rate-limiting types and algorithms, and covers how to implement rate limiting effectively.

What is Rate Limiting?

Rate limiting is a technique used in system architecture to regulate how quickly a system processes or serves incoming requests or actions. It limits the number or frequency of client requests to prevent overload, maintain stability, and ensure fair resource distribution.

- Rate limiting reduces the risk of resource abuse and denial-of-service (DoS) attacks by capping the number of requests that can be made in a given amount of time.

- Rate limiting is widely used to maintain the performance, dependability, and security of systems in scenarios such as web servers, APIs, network traffic management, and database access.

What is a Rate Limiter?

A rate limiter is a component that controls the rate of traffic or requests to a system. It is a specific implementation or tool used to enforce rate-limiting.

Use Cases of Rate Limiting

Below are the use cases of Rate Limiting:

- API Rate Limiting: APIs commonly use rate limiting to control the volume of client requests, ensure fair access to resources, and prevent abuse.

- Web Server Rate Limiting: Web servers use rate limiting as a defense against denial-of-service attacks and to prevent server overload.

- Database Rate Limiting: Rate limiting is applied to database queries to keep the database server from being overloaded and to preserve database performance. For instance, an e-commerce website can restrict the number of database queries per user to avoid resource exhaustion and keep the site running smoothly.

- Login Rate Limiting: Login systems use rate limiting to stop password guessing and brute-force attacks. By restricting the number of login attempts per user account or IP address, systems make unauthorized access much harder.
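For login rate limiting, one common approach is to count only *failed* attempts and temporarily block a user/IP pair once it reaches a threshold. The sketch below assumes this policy. The class name `LoginAttemptLimiter`, the thresholds, and the choice to clear the history after a successful login are illustrative assumptions rather than a standard design. The optional `now` parameter lets you supply timestamps explicitly for testing.

```python
import time
from collections import defaultdict, deque


class LoginAttemptLimiter:
    """Hypothetical limiter: blocks a (username, ip) pair after too many failed logins."""

    def __init__(self, max_failures=5, window_seconds=300):
        self.max_failures = max_failures
        self.window_seconds = window_seconds
        # Maps (username, ip) -> deque of failure timestamps, oldest first
        self.failures = defaultdict(deque)

    def _prune(self, key, now):
        # Drop failures that are older than the window
        q = self.failures[key]
        while q and q[0] <= now - self.window_seconds:
            q.popleft()
        return q

    def is_blocked(self, username, ip, now=None):
        now = time.monotonic() if now is None else now
        return len(self._prune((username, ip), now)) >= self.max_failures

    def record_failure(self, username, ip, now=None):
        now = time.monotonic() if now is None else now
        self._prune((username, ip), now).append(now)

    def record_success(self, username, ip):
        # Assumed policy: a successful login clears the failure history
        self.failures.pop((username, ip), None)


limiter = LoginAttemptLimiter(max_failures=3, window_seconds=60)
for t in range(3):
    limiter.record_failure("alice", "203.0.113.7", now=t)
print(limiter.is_blocked("alice", "203.0.113.7", now=3))   # True
print(limiter.is_blocked("alice", "203.0.113.7", now=61))  # False: older failures aged out
```

Keying on both the account and the IP address means one attacker cannot lock every user out from a single address. In practice, many systems also apply a separate, looser per-IP limit.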

Types of Rate Limiting

Below are the main types of rate limiting:

1. IP-based Rate Limiting

IP-based rate limiting caps the number of requests a single IP address can send within a specified time (e.g., 10 requests per minute). By restricting how much traffic can originate from one address, it is frequently used to stop abuse such as bots and denial-of-service attacks.

- Advantages:
  - It’s simple to implement at both the network and application level.
  - If someone is trying to flood the system with requests, limiting requests from their IP can stop it.
- Limitations:
  - Attackers can use VPNs, proxy servers, or botnets to send requests from many different IP addresses and get around the limit.
  - If multiple users share the same IP address (as in a corporate network), a legitimate user could get blocked when someone else on the same network exceeds the limit.

For Example: An online retailer might set a limit of 10 requests per minute per IP address to stop bots from scraping product data. This makes large-scale scraping much harder, while regular users can still shop without interruption.
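A minimal sketch of IP-based rate limiting, assuming the retailer example above (10 requests per minute per address), keeps one fixed-window counter per IP. The class name `IPRateLimiter` and the in-memory dictionary are assumptions for illustration. A deployment with several servers would usually keep these counters in a shared store. The IP addresses come from the documentation-only ranges.

```python
import time
from collections import defaultdict


class IPRateLimiter:
    """Per-IP fixed window limiter, e.g. 10 requests per 60 seconds per address."""

    def __init__(self, limit=10, window_seconds=60):
        self.limit = limit
        self.window_seconds = window_seconds
        # Maps ip -> [window_index, count_in_that_window]
        self.counters = defaultdict(lambda: [None, 0])

    def allow_request(self, ip, now=None):
        now = time.monotonic() if now is None else now
        window = int(now // self.window_seconds)
        entry = self.counters[ip]
        if entry[0] != window:
            # A new window started for this IP: reset its count
            entry[0], entry[1] = window, 0
        if entry[1] < self.limit:
            entry[1] += 1
            return True
        return False


limiter = IPRateLimiter(limit=10, window_seconds=60)
results = [limiter.allow_request("203.0.113.5", now=1.0) for _ in range(12)]
print(results.count(True))                              # 10
print(limiter.allow_request("198.51.100.9", now=1.0))   # True: separate counter per IP
```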

2. Server-based Rate Limiting

Server-based rate limiting controls the number of requests a particular server accepts in a predetermined period of time (e.g., 100 requests per second).

- Advantages:
  - Helps protect the server from being overwhelmed, especially during peak usage.
  - By capping the total number of requests each server accepts, you keep its resource usage within safe bounds so that response times stay predictable for everyone.
- Limitations:
  - If attackers send requests across different servers, they might avoid hitting the rate limit on any single one.
  - If the limit is too low or the server is under heavy load, even legitimate users might face delays or blocks.

For Example: A music streaming service might implement server-based rate limiting to prevent their API from being overloaded during rush hours. By setting a limit of 100 requests per second per server, they can ensure the service stays fast and responsive even when usage spikes.
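Unlike the per-IP approach, server-based rate limiting uses one shared budget for the whole server, no matter which client sends the request. The sketch below is a simple example: a single counter over fixed one-second windows, protected by a lock because request handlers often run on several threads. The class name `ServerRateLimiter` and the one-second fixed window are assumptions chosen to match the "100 requests per second" example.

```python
import threading
import time


class ServerRateLimiter:
    """One shared counter for the whole server, regardless of the client."""

    def __init__(self, max_per_second=100):
        self.max_per_second = max_per_second
        self.current_second = None
        self.count = 0
        # Handlers may run concurrently, so guard the shared counter
        self.lock = threading.Lock()

    def allow_request(self, now=None):
        now = time.monotonic() if now is None else now
        second = int(now)
        with self.lock:
            if second != self.current_second:
                # A new one-second window: reset the shared count
                self.current_second = second
                self.count = 0
            if self.count < self.max_per_second:
                self.count += 1
                return True
            return False


server_limiter = ServerRateLimiter(max_per_second=100)
# 150 requests (from any mix of clients) arriving in the same second
allowed = sum(server_limiter.allow_request(now=5.2) for _ in range(150))
print(allowed)  # 100
```

Because the budget is shared, a few heavy clients can use it up for everyone. This is why server-based limits are often combined with per-client limits.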

3. Geography-based Rate Limiting

Geography-based rate limiting restricts traffic based on the geographic location of the IP address. It’s useful for blocking malicious requests that originate from certain regions (for example, countries known for high levels of cyber attacks), or for complying with regional laws and regulations.

- Advantages:
  - If you know certain regions are the source of a lot of bad traffic (e.g., spam, hacking attempts), you can limit requests from those areas.
  - Helps comply with local data protection laws or restrictions on content in certain countries.
- Limitations:
  - Attackers can use VPNs or proxy servers to disguise their actual location and get around geography-based limits.
  - Users who travel or reach services through international servers (such as employees on a corporate VPN or customers on hotel networks) might get blocked if their traffic appears to come from a restricted region.

For Example: A social media platform might implement geography-based rate limiting to fight spam. If a certain region is known for having a lot of fake accounts or bot activity, the platform could set a rule where IP addresses from that region can only make 10 requests per minute.
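Geography-based rate limiting can be sketched as a per-IP limiter whose limit depends on the region the IP maps to. In the sketch below, the `IP_TO_REGION` dictionary is a hypothetical stand-in for a real geolocation lookup. The region names, the limit of 10 requests per minute for the flagged region, and the default of 100 are all illustrative assumptions.

```python
import time
from collections import defaultdict

# Hypothetical stand-in for a geolocation lookup (a real system would query a GeoIP database)
IP_TO_REGION = {
    "203.0.113.10": "region-a",
    "198.51.100.20": "region-b",
}

# Requests per minute allowed per region; regions not listed use the default
REGION_LIMITS = {"region-b": 10}
DEFAULT_LIMIT = 100


class GeoRateLimiter:
    def __init__(self, window_seconds=60):
        self.window_seconds = window_seconds
        # Maps ip -> [window_index, count_in_that_window]
        self.counters = defaultdict(lambda: [None, 0])

    def limit_for(self, ip):
        region = IP_TO_REGION.get(ip, "unknown")
        return REGION_LIMITS.get(region, DEFAULT_LIMIT)

    def allow_request(self, ip, now=None):
        now = time.monotonic() if now is None else now
        window = int(now // self.window_seconds)
        entry = self.counters[ip]
        if entry[0] != window:
            entry[0], entry[1] = window, 0
        if entry[1] < self.limit_for(ip):
            entry[1] += 1
            return True
        return False


geo = GeoRateLimiter()
print(sum(geo.allow_request("198.51.100.20", now=0) for _ in range(15)))  # 10
print(sum(geo.allow_request("203.0.113.10", now=0) for _ in range(15)))   # 15
```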

How Rate Limiting Works?

Rate limiting manages the number of requests a user or system can make to a service within a predetermined period of time. For instance, a service might permit 100 requests per minute. Once that limit is reached, any additional requests are blocked or slowed down until the time window resets.

- This helps prevent things like abuse, bot attacks, or overloading the server, while also ensuring that all users get a fair chance to access the service.

- It is often implemented with algorithms such as token bucket or sliding window, but the goal is always the same: to keep the system running smoothly and protect it from excessive traffic.
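For HTTP services, a blocked request is usually answered with status code 429 Too Many Requests (defined in RFC 6585). The response can include a `Retry-After` header that tells the client how many seconds to wait. The sketch below shows this for the "100 requests per minute" example. `WindowLimiter` and `handle_request` are hypothetical names, not any web framework's API. The handler returns a plain `(status, headers, body)` tuple so it can be adapted to any framework.

```python
import math
import time


class WindowLimiter:
    """Fixed window limiter that also reports when the current window resets."""

    def __init__(self, limit=100, window_seconds=60):
        self.limit = limit
        self.window_seconds = window_seconds
        self.window = None
        self.count = 0

    def check(self, now):
        window = int(now // self.window_seconds)
        if window != self.window:
            self.window, self.count = window, 0
        # Whole seconds until the next window begins
        retry_after = math.ceil((window + 1) * self.window_seconds - now)
        if self.count < self.limit:
            self.count += 1
            return True, retry_after
        return False, retry_after


def handle_request(limiter, now=None):
    """Hypothetical handler returning (status, headers, body)."""
    now = time.time() if now is None else now
    allowed, retry_after = limiter.check(now)
    if not allowed:
        # 429 Too Many Requests, with Retry-After telling the client how long to wait
        return 429, {"Retry-After": str(retry_after)}, "Too Many Requests"
    return 200, {}, "OK"


limiter = WindowLimiter(limit=100, window_seconds=60)
responses = [handle_request(limiter, now=30.5) for _ in range(101)]
print(responses[99][0])  # 200
print(responses[100])    # (429, {'Retry-After': '30'}, 'Too Many Requests')
```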

The rate limiting algorithms below show how this works in practice.

Rate Limiting Algorithms

Several rate limiting algorithms are commonly used in system design to control the rate of incoming requests or actions. Below are some popular rate limiting algorithms:

1. Token Bucket Algorithm

- The token bucket algorithm allocates tokens at a fixed rate into a "bucket."

- Each request consumes a token from the bucket, and requests are only allowed if there are sufficient tokens available.

- Unused tokens are stored in the bucket, up to a maximum capacity.

- This algorithm provides a simple and flexible way to enforce an average request rate while still allowing short bursts of traffic, up to the bucket's capacity.

Below is a simple implementation of the token bucket algorithm in Python:

```python
import time


class TokenBucket:
    def __init__(self, capacity, refill_rate):
        self.capacity = capacity          # maximum number of tokens
        self.tokens = capacity            # current number of tokens (bucket starts full)
        self.refill_rate = refill_rate    # tokens added per second
        # monotonic() is unaffected by system clock changes
        self.last_refill_time = time.monotonic()

    def refill(self):
        current_time = time.monotonic()
        elapsed_time = current_time - self.last_refill_time
        # Refill the bucket based on elapsed time, never exceeding capacity
        new_tokens = elapsed_time * self.refill_rate
        self.tokens = min(self.capacity, self.tokens + new_tokens)
        self.last_refill_time = current_time

    def allow_request(self):
        self.refill()
        if self.tokens >= 1:
            self.tokens -= 1  # each request consumes one token
            return True
        return False


# Example usage:
bucket = TokenBucket(5, 1)  # 5 tokens max, refill 1 token per second
for _ in range(10):
    if bucket.allow_request():
        print("Request allowed")
    else:
        print("Request denied")
    time.sleep(0.5)  # Simulate request interval
```

2. Leaky Bucket Algorithm

- The leaky bucket algorithm models a bucket with a hole in the bottom: requests pour in at whatever rate they arrive and leak out at a constant, controlled rate.

- Incoming requests are added to the bucket, and if the bucket exceeds a certain capacity, excess requests are either delayed or rejected.

- This algorithm enforces a maximum request rate. The bucket's capacity determines how large a burst can be absorbed before requests are turned away.


Below is a simple implementation of the leaky bucket algorithm in Python:

```python
import time


class LeakyBucket:
    def __init__(self, capacity, leak_rate):
        self.capacity = capacity      # maximum bucket size
        self.water = 0                # current amount of "water" in the bucket
        self.leak_rate = leak_rate    # units of water that leak out per second
        self.last_time = time.monotonic()

    def leak(self):
        current_time = time.monotonic()
        elapsed_time = current_time - self.last_time
        # Drain the bucket at a constant rate, never below empty
        self.water = max(0, self.water - elapsed_time * self.leak_rate)
        self.last_time = current_time

    def allow_request(self):
        self.leak()
        # Only accept the request if one more unit still fits in the bucket
        if self.water + 1 <= self.capacity:
            self.water += 1  # add 1 unit of "water" for each request
            return True
        return False  # bucket is full: reject the request


# Example usage:
bucket = LeakyBucket(5, 1)  # max 5 requests, leak 1 per second
for _ in range(10):
    if bucket.allow_request():
        print("Request allowed")
    else:
        print("Request denied")
    time.sleep(0.5)  # Simulate request interval
```

3. Fixed Window Counting Algorithm

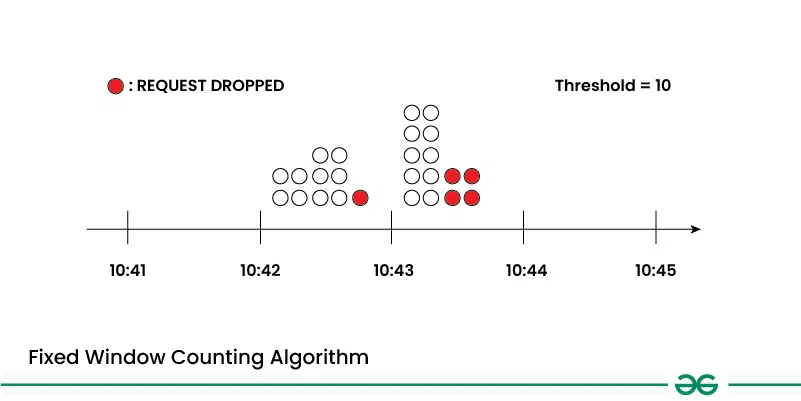

- The fixed window counting algorithm tracks the number of requests within a fixed time window (e.g., one minute, one hour).

- Requests exceeding a predefined threshold within the window are rejected or delayed until the window resets.

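- This algorithm provides a straightforward way to limit the rate of requests over short periods, but it handles bursts at window boundaries poorly: a client can send up to twice the limit in a short span by clustering requests at the end of one window and the start of the next.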
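The boundary problem is easy to see in a small simulation over fixed timestamps. The helper `fixed_window_allowed` is a hypothetical name. It aligns windows to multiples of the window size, which is one common way to implement fixed windows. The limit of 5 requests per 10 seconds and the timestamps are illustrative.

```python
def fixed_window_allowed(timestamps, limit, window_seconds):
    """Return which requests a fixed window counter would accept."""
    counts = {}  # window index -> requests accepted in that window
    decisions = []
    for t in timestamps:
        window = int(t // window_seconds)
        if counts.get(window, 0) < limit:
            counts[window] = counts.get(window, 0) + 1
            decisions.append(True)
        else:
            decisions.append(False)
    return decisions


# Limit: 5 requests per 10 seconds. Five requests arrive just before the
# 10-second boundary and five more just after it.
timestamps = [9.5, 9.6, 9.7, 9.8, 9.9, 10.0, 10.1, 10.2, 10.3, 10.4]
decisions = fixed_window_allowed(timestamps, limit=5, window_seconds=10)
print(sum(decisions))  # 10: twice the limit accepted within about one second
```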

Below is a simple implementation of the fixed window counting algorithm in Python:

```python
import time


class FixedWindowCounter:
    def __init__(self, limit, window_size):
        self.limit = limit              # max requests allowed per window
        self.window_size = window_size  # window length in seconds
        self.count = 0                  # requests counted in the current window
        self.start_time = time.monotonic()

    def allow_request(self):
        current_time = time.monotonic()
        if current_time - self.start_time >= self.window_size:
            # The window has expired: start a new one at the current time
            self.start_time = current_time
            self.count = 0
        if self.count < self.limit:
            self.count += 1
            return True
        return False


# Example usage:
window = FixedWindowCounter(5, 10)  # 5 requests per 10 seconds
for _ in range(10):
    if window.allow_request():
        print("Request allowed")
    else:
        print("Request denied")
    time.sleep(2)  # Simulate request interval
```

4. Sliding Window Log Algorithm

- The sliding window log algorithm maintains a log of timestamps for each request received.

- Requests older than a predefined time interval are removed from the log, and new requests are added.

- The rate of requests is calculated based on the number of requests within the sliding window.

- This algorithm allows more precise rate limiting and handles bursts better than fixed window counting, at the cost of storing a timestamp for every request in the window.
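To see the difference at a window boundary, the sketch below replays a burst of ten requests through a sliding window log. Five requests arrive just before the 10-second mark and five just after it, with a limit of 5 requests per 10 seconds. `sliding_log_allowed` is a hypothetical helper. Like the implementation that follows, it logs only accepted requests and evicts timestamps that are at least one window old.

```python
from collections import deque


def sliding_log_allowed(timestamps, limit, window_seconds):
    """Return which requests a sliding window log would accept."""
    log = deque()  # timestamps of accepted requests, oldest first
    decisions = []
    for t in timestamps:
        # Evict requests that fell out of the window ending at t
        while log and log[0] <= t - window_seconds:
            log.popleft()
        if len(log) < limit:
            log.append(t)
            decisions.append(True)
        else:
            decisions.append(False)
    return decisions


timestamps = [9.5, 9.6, 9.7, 9.8, 9.9, 10.0, 10.1, 10.2, 10.3, 10.4]
print(sum(sliding_log_allowed(timestamps, limit=5, window_seconds=10)))  # 5
```

Any 10-second span now contains at most 5 accepted requests, no matter where the burst falls.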

Below is a simple implementation of the sliding window log algorithm in Python:

```python
import time
from collections import deque


class SlidingWindowLog:
    def __init__(self, limit, window_size):
        self.limit = limit              # max requests allowed in any window
        self.window_size = window_size  # window length in seconds
        self.requests = deque()         # timestamps of accepted requests, oldest first

    def allow_request(self):
        current_time = time.monotonic()
        # Remove requests that fall outside the sliding window
        while self.requests and self.requests[0] <= current_time - self.window_size:
            self.requests.popleft()
        if len(self.requests) < self.limit:
            self.requests.append(current_time)
            return True
        return False


# Example usage:
sliding_window = SlidingWindowLog(5, 10)  # 5 requests per 10 seconds
for _ in range(10):
    if sliding_window.allow_request():
        print("Request allowed")
    else:
        print("Request denied")
    time.sleep(2)  # Simulate request interval
```

Client-Side vs. Server-Side Rate Limiting

Below are the differences between Client-Side and Server-Side Rate Limiting:

| Aspect | Client-Side Rate Limiting | Server-Side Rate Limiting |
|---|---|---|
| Location of Enforcement | Enforced by the client application or client library. | Enforced by the server infrastructure or API gateway. |
| Request Control | Requests are throttled or delayed before reaching the server. | Requests are processed by the server, which decides whether to accept, reject, or delay them based on predefined rules. |
| Flexibility | Limited flexibility as it relies on client-side implementation and configuration. | Offers greater flexibility as rate limiting rules can be centrally managed and adjusted on the server side without client-side changes. |
| Security | Less secure as it can be bypassed or manipulated by clients. | More secure as enforcement is centralized and controlled by the server, reducing the risk of abuse or exploitation. |
| Scalability | May impact client performance and scalability, especially in distributed environments with a large number of clients. | Better scalability as rate limiting can be applied globally across all clients and adjusted dynamically based on server load and resource availability. |
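To illustrate the client-side column of the table, here is a sketch of a client that throttles itself by spacing out its own requests before they reach the server. The class name `ClientThrottle` and the minimum-interval policy are assumptions. The `clock` and `sleep` parameters are injectable so the behavior can be tested without real waiting. A throttle like this only helps with well-behaved clients, which is why the table rates client-side limiting as less secure.

```python
import time


class ClientThrottle:
    """Client-side throttle: spaces calls at least 1 / max_calls_per_second apart."""

    def __init__(self, max_calls_per_second, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = 1.0 / max_calls_per_second
        self.clock = clock
        self.sleep = sleep
        self.next_allowed = float("-inf")  # the first call never waits

    def wait(self):
        now = self.clock()
        if now < self.next_allowed:
            # Delay locally instead of sending a request the server may reject
            self.sleep(self.next_allowed - now)
            now = self.next_allowed
        self.next_allowed = now + self.min_interval


throttle = ClientThrottle(max_calls_per_second=5)
for i in range(3):
    throttle.wait()
    print("sending request", i)  # a real client would call the API here
```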

Rate Limiting in Different Layers of the System

Below is how Rate Limiting can be applied at different layers of the system:

- Application Layer:
  - Rate limiting at the application layer means implementing the rate limiting logic inside the application code itself.
  - It applies to every request the application processes, regardless of the request's source or destination.
- API Gateway Layer:
  - Rate limiting at the API gateway layer means configuring rate limiting rules in the API gateway.
  - It applies to incoming requests at the gateway before they are forwarded to downstream services.
- Service Layer:
  - Rate limiting at the service layer involves implementing rate limiting logic within individual services or microservices.
  - It applies to requests processed by each service independently, allowing for fine-grained control and customization.
- Database Layer:
  - Rate limiting at the database layer involves controlling the rate of database queries or transactions.
  - It applies to database operations performed by the application or services, such as read and write operations.
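At the application layer, rate limiting is often written as a wrapper around the code to be protected. The sketch below uses a Python decorator with a sliding window log. The names `rate_limited`, `RateLimitExceeded`, and `generate_report` are hypothetical. The limit is shared by all callers of the decorated function, and the sketch is not thread-safe. Concurrent use would need a lock.

```python
import functools
import time
from collections import deque


class RateLimitExceeded(Exception):
    """Raised when a call is rejected by the rate limiter."""


def rate_limited(limit, window_seconds, clock=time.monotonic):
    """Decorator allowing at most `limit` calls in any `window_seconds` span."""
    def decorator(func):
        calls = deque()  # timestamps of accepted calls, oldest first

        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            now = clock()
            # Forget calls that fell outside the sliding window
            while calls and calls[0] <= now - window_seconds:
                calls.popleft()
            if len(calls) >= limit:
                raise RateLimitExceeded(
                    f"{func.__name__}: more than {limit} calls per {window_seconds}s"
                )
            calls.append(now)
            return func(*args, **kwargs)

        return wrapper
    return decorator


@rate_limited(limit=2, window_seconds=1.0)
def generate_report(user_id):
    return f"report for {user_id}"


print(generate_report(1))
print(generate_report(2))
try:
    generate_report(3)
except RateLimitExceeded as exc:
    print("rejected:", exc)
```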

Challenges of Rate Limiting

Below are the challenges of Rate Limiting:

- Latency: Rate limiting can add latency, particularly when requests are throttled or delayed for exceeding rate limits.

- False Positives: If the rate limiting logic is flawed or the limits are too strict, rate limiting may block valid requests. False positives can frustrate users and cause service interruptions.

- Configuration Complexity: It can be difficult to set up rate limiting rules and thresholds, particularly in systems with a variety of traffic patterns and use cases.

- Scalability Challenges: If not scaled appropriately, the rate limiter itself can become a bottleneck under heavy load, especially when counters must be shared across many servers. A major challenge is ensuring that rate limiting keeps up with growing traffic without degrading performance.