Word Embeddings are numeric representations of words in a lower-dimensional space, that capture semantic and syntactic information. They play a important role in Natural Language Processing (NLP) tasks. Here, we'll discuss some traditional and neural approaches used to implement Word Embeddings, such as TF-IDF, Word2Vec, and GloVe.

Above images represent the Process and an Example of Word Embeddings in Natural Language Processing.

What is Word Embedding in NLP?

Word Embedding is an approach for representing words and documents. Word Embedding or Word Vector is a numeric vector input that represents a word in a lower-dimensional space.

- Method of extracting features out of text so that we can input those features into a machine learning model to work with text data.

- It allows words with similar meanings to have a similar representation. Thus, Similarity can be assessed based on Similar vector representations.

- High Computation Cost: Large input vectors will mean a huge number of weights. Embeddings help to reduce dimensionality.

- Preserve syntactical and semantic information.

- Some methods based on Word Frequency are Bag of Words (BOW), Count Vectorizer and TF-IDF.

Need for Word Embedding?

- To reduce dimensionality.

- To use a word to predict the words around it.

- Helps in enhancing model interpretability due to numerical representation.

- Inter-word semantics and similarity can be captured.

How are Word Embeddings used?

- They are used as input to machine learning models.

Words ----> Numeric representation ----> Use in Training or Inference.

- To represent or visualize any underlying patterns of usage in the corpus that was used to train them.

Let's take an example to understand how word vector is generated by taking emotions which are most frequently used in certain conditions and transform each emoji into a vector and the conditions will be our features.

In a similar way, we can create word vectors for different words as well on the basis of given features. The words with similar vectors are most likely to have the same meaning or are used to convey the same sentiment.

Approaches for Text Representation

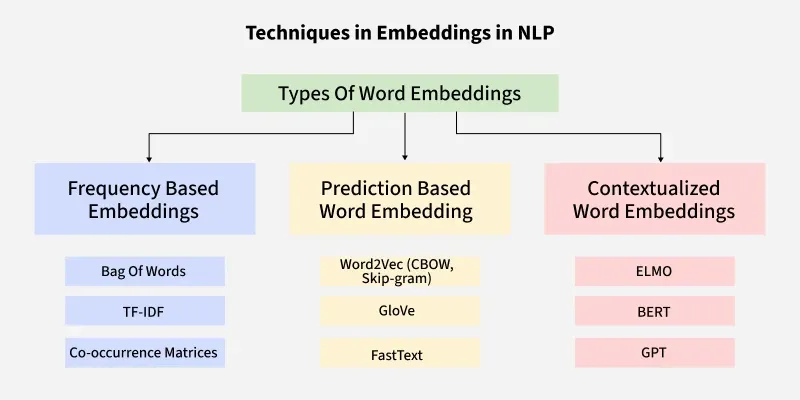

Techniques in Embeddings in NLP

Techniques in Embeddings in NLP1. Traditional Approach

The conventional method involves compiling a list of distinct terms and giving each one a unique integer value or id, and after that, insert each word's distinct id into the sentence. Every vocabulary word is handled as a feature in this instance. Thus, a large vocabulary will result in an extremely large feature size. Common traditional methods include:

1.1 One-Hot Encoding

One-hot encoding is a simple method for representing words in natural language processing (NLP). In this encoding scheme, each word in the vocabulary is represented as a unique vector, where the dimensionality of the vector is equal to the size of the vocabulary. The vector has all elements set to 0, except for the element corresponding to the index of the word in the vocabulary, which is set to 1.

Following are the disadvantages:

- High-dimensional vectors, Computationally expensive and Memory-intensive

- Does not capture Semantic Relationships

- Restricted to the seen training vocabulary

Python def one_hot_encode(text): words = text.split() vocabulary = set(words) word_to_index = {word: i for i, word in enumerate(vocabulary)} one_hot_encoded = [] for word in words: one_hot_vector = [0] * len(vocabulary) one_hot_vector[word_to_index[word]] = 1 one_hot_encoded.append(one_hot_vector) return one_hot_encoded, word_to_index, vocabulary example_text = "cat in the hat dog on the mat bird in the tree" one_hot_encoded, word_to_index, vocabulary = one_hot_encode(example_text) print("Vocabulary:", vocabulary) print("Word to Index Mapping:", word_to_index) print("One-Hot Encoded Matrix:") for word, encoding in zip(example_text.split(), one_hot_encoded): print(f"{word}: {encoding}") Output:

Vocabulary: {'mat', 'the', 'bird', 'hat', 'on', 'in', 'cat', 'tree', 'dog'}

Word to Index Mapping: {'mat': 0, 'the': 1, 'bird': 2, 'hat': 3, 'on': 4, 'in': 5, 'cat': 6, 'tree': 7, 'dog': 8}

One-Hot Encoded Matrix:

cat: [0, 0, 0, 0, 0, 0, 1, 0, 0]

in: [0, 0, 0, 0, 0, 1, 0, 0, 0]

the: [0, 1, 0, 0, 0, 0, 0, 0, 0]

hat: [0, 0, 0, 1, 0, 0, 0, 0, 0]

dog: [0, 0, 0, 0, 0, 0, 0, 0, 1]

on: [0, 0, 0, 0, 1, 0, 0, 0, 0]

the: [0, 1, 0, 0, 0, 0, 0, 0, 0]

mat: [1, 0, 0, 0, 0, 0, 0, 0, 0]

bird: [0, 0, 1, 0, 0, 0, 0, 0, 0]

in: [0, 0, 0, 0, 0, 1, 0, 0, 0]

the: [0, 1, 0, 0, 0, 0, 0, 0, 0]

tree: [0, 0, 0, 0, 0, 0, 0, 1, 0]1.2 Bag of Word (Bow)

Bag-of-Words (BoW) is a text representation technique that represents a document as an unordered set of words and their respective frequencies. It discards the word order and captures the frequency of each word in the document, creating a vector representation. Limitations are as follows:

- Ignores the order of words in the document: Causes loss of sequential information and context

- Sparse representations make it Memory intensive: Many elements are zero resulting in Computational inefficiency with large datasets.

Python from sklearn.feature_extraction.text import CountVectorizer documents = ["This is the first document.", "This document is the second document.", "And this is the third one.", "Is this the first document?"] vectorizer = CountVectorizer() X = vectorizer.fit_transform(documents) feature_names = vectorizer.get_feature_names_out() print("Bag-of-Words Matrix:") print(X.toarray()) print("Vocabulary (Feature Names):", feature_names) Output:

Bag-of-Words Matrix:

[[0 1 1 1 0 0 1 0 1]

[0 2 0 1 0 1 1 0 1]

[1 0 0 1 1 0 1 1 1]

[0 1 1 1 0 0 1 0 1]]

Vocabulary (Feature Names): ['and' 'document' 'first' 'is' 'one' 'second' 'the' 'third' 'this']

1.3 Term frequency-inverse document frequency (TF-IDF)

Term Frequency-Inverse Document Frequency, commonly known as TF-IDF, is a numerical statistic that reflects the importance of a word in a document relative to a collection of documents (corpus). It is widely used in natural language processing and information retrieval to evaluate the significance of a term within a specific document in a larger corpus. TF-IDF consists of two components:

- Term Frequency (TF): Measures how often a term (word) appears in a document.

- Inverse Document Frequency (IDF): Measures the importance of a term across a collection of documents.

The TF-IDF score for a term t in a document d is then given by multiplying the TF and IDF values:

TF-IDF(t,d,D)=TF(t,d)×IDF(t,D)

Where:

Term Frequency (TF): \text{TF}(t,d) = \frac{\text{Total number of times term } t \text{ appears in document } d}{\text{Total number of terms in document } d}

Inverse Document Frequency (IDF): \text{IDF}(t,D) = \log\left(\frac{\text{Total documents }}{\text{Number of documents containing term t}}\right)

The higher the TF-IDF score for a term in a document, the more important that term is to that document within the context of the entire corpus. This weighting scheme helps in identifying and extracting relevant information from a large collection of documents, and it is commonly used in text mining, information retrieval, and document clustering.

Steps are as follows:

- Define a set of sample documents.

- Use TfidfVectorizer to transform these documents into a TF-IDF matrix.

- Extract and print the TF-IDF values for each word in each document.

- This statistical measure helps assess the importance of words in a document relative to their frequency across a collection of documents,

- Helps in information retrieval and text analysis tasks.

Python from sklearn.feature_extraction.text import TfidfVectorizer documents = [ "The quick brown fox jumps over the lazy dog.", "A journey of a thousand miles begins with a single step." ] vectorizer = TfidfVectorizer() tfidf_matrix = vectorizer.fit_transform(documents) feature_names = vectorizer.get_feature_names_out() tfidf_values = {} for doc_index, doc in enumerate(documents): feature_index = tfidf_matrix[doc_index, :].nonzero()[1] tfidf_doc_values = zip(feature_index, [tfidf_matrix[doc_index, x] for x in feature_index]) tfidf_values[doc_index] = {feature_names[i]: value for i, value in tfidf_doc_values} for doc_index, values in tfidf_values.items(): print(f"Document {doc_index + 1}:") for word, tfidf_value in values.items(): print(f"{word}: {tfidf_value}") print("\n") Output:

Document 1:

dog: 0.3404110310756642

lazy: 0.3404110310756642

over: 0.3404110310756642

jumps: 0.3404110310756642

fox: 0.3404110310756642

brown: 0.3404110310756642

quick: 0.3404110310756642

the: 0.43455990318254417

Document 2:

step: 0.3535533905932738

single: 0.3535533905932738

with: 0.3535533905932738

begins: 0.3535533905932738

miles: 0.3535533905932738

thousand: 0.3535533905932738

of: 0.3535533905932738

journey: 0.3535533905932738

Some of the disadvantages of TF-IDF are:

- Inability in Capturing context: Doesn't consider semantic relationships in words.

- Sensitivity to Document Length: Longer documents have higher overall term frequencies, potentially biasing TF-IDF towards longer documents.

2. Neural Approach

2.1 Word2Vec

Word2Vec is a neural approach for generating word embeddings. It belongs to the family of neural word embedding techniques and specifically falls under the category of distributed representation models. It is a popular technique in natural language processing (NLP).

- Represent words as continuous vector spaces.

- Aim: Capture the semantic relationships between words by mapping them to high-dimensional vectors.

- Words with similar meanings should have similar vector representations. Every word is assigned a vector. We start with either a random or one-hot vector.

There are two neural embedding methods for Word2Vec: Continuous Bag of Words (CBOW) and Skip-gram.

2.2 Continuous Bag of Words(CBOW)

Continuous Bag of Words (CBOW) is a type of neural network architecture used in the Word2Vec model. The primary objective of CBOW is to predict a target word based on its context, which consists of the surrounding words in a given window. Given a sequence of words in a context window, the model is trained to predict the target word at the center of the window.

- Feedforward neural network with a single hidden layer.

- The input layer, hidden layer, and output layer represent the context words, learned continuous vectors or embeddings, and the target word.

- Useful for learning distributed representations of words in a continuous vector space.

The hidden layer contains the continuous vector representations (word embeddings) of the input words.

- The weights between the input layer and the hidden layer are learned during training.

- The dimensionality of the hidden layer represents the size of the word embeddings (the continuous vector space).

Python import torch import torch.nn as nn import torch.optim as optim # Define CBOW model class CBOWModel(nn.Module): def __init__(self, vocab_size, embed_size): super(CBOWModel, self).__init__() self.embeddings = nn.Embedding(vocab_size, embed_size) self.linear = nn.Linear(embed_size, vocab_size) def forward(self, context): context_embeds = self.embeddings(context).sum(dim=1) output = self.linear(context_embeds) return output context_size = 2 raw_text = "word embeddings are awesome" tokens = raw_text.split() vocab = set(tokens) word_to_index = {word: i for i, word in enumerate(vocab)} data = [] for i in range(2, len(tokens) - 2): context = [word_to_index[word] for word in tokens[i - 2:i] + tokens[i + 1:i + 3]] target = word_to_index[tokens[i]] data.append((torch.tensor(context), torch.tensor(target))) # Hyperparameters vocab_size = len(vocab) embed_size = 10 learning_rate = 0.01 epochs = 100 # Initialize CBOW model cbow_model = CBOWModel(vocab_size, embed_size) criterion = nn.CrossEntropyLoss() optimizer = optim.SGD(cbow_model.parameters(), lr=learning_rate) # Training loop for epoch in range(epochs): total_loss = 0 for context, target in data: optimizer.zero_grad() output = cbow_model(context) loss = criterion(output.unsqueeze(0), target.unsqueeze(0)) loss.backward() optimizer.step() total_loss += loss.item() print(f"Epoch {epoch + 1}, Loss: {total_loss}") # Example usage word_to_lookup = "embeddings" word_index = word_to_index[word_to_lookup] embedding = cbow_model.embeddings(torch.tensor([word_index])) print(f"Embedding for '{word_to_lookup}': {embedding.detach().numpy()}") Output:

Embedding for 'embeddings': [[-2.7053456 2.1384873 0.6417674 1.2882394 0.53470695 0.5651745

0.64166373 -1.1691749 0.32658175 -0.99961764]]

2.3 Skip-Gram

The Skip-Gram model learns distributed representations of words in a continuous vector space. The main objective of Skip-Gram is to predict context words (words surrounding a target word) given a target word. This is the opposite of the Continuous Bag of Words (CBOW) model, where the objective is to predict the target word based on its context. It is shown that this method produces more meaningful embeddings.

- Output: Trained vectors of each word after many iterations through the corpus.

- Preserve syntactical or semantic information, Converted to lower dimensions.

- Similar meaning (semantic info) vectors are placed close to each other in space.

- vector_size parameter controls the dimensionality of the word vectors, and you can adjust other parameters such as window.

Note: Word2Vec models can perform better with larger datasets. If you have a large corpus, you might achieve more meaningful word embeddings.

Python !pip install gensim from gensim.models import Word2Vec from nltk.tokenize import word_tokenize import nltk nltk.download('punkt') sample = "Word embeddings are dense vector representations of words." tokenized_corpus = word_tokenize(sample.lower()) skipgram_model = Word2Vec(sentences=[tokenized_corpus], vector_size=100, window=5, sg=1, min_count=1, workers=4) # Training skipgram_model.train([tokenized_corpus], total_examples=1, epochs=10) skipgram_model.save("skipgram_model.model") loaded_model = Word2Vec.load("skipgram_model.model") vector_representation = loaded_model.wv['word'] print("Vector representation of 'word':", vector_representation) Output:

Vector representation of 'word': [-9.5800208e-03 8.9437785e-03 4.1664648e-03 9.2367809e-03

6.6457358e-03 2.9233587e-03 9.8055992e-03 -4.4231843e-03

-6.8048164e-03 4.2256550e-03 3.7299085e-03 -5.6668529e-03

--------------------------------------------------------------

2.8835384e-03 -1.5386029e-03 9.9318363e-03 8.3507905e-03

2.4184163e-03 7.1170190e-03 5.8888551e-03 -5.5787875e-03]

The choice between CBOW and Skip-gram depends on data and the task.

- CBOW might be preferred when training resources are limited, and capturing syntactic information is important.

- Skip-gram is chosen when semantic relationships and the representation of rare words are important.

3. Pretrained Word-Embedding

Pre-trained word embeddings are representations of words that are learned from large corpora and are made available for reuse in various Natural Language Processing (NLP) tasks. These embeddings capture semantic relationships between words, allowing the model to understand similarities and relationships between different words in a meaningful way.

3.1 GloVe

GloVe is trained on global word co-occurrence statistics. It leverages the global context to create word embeddings that reflect the overall meaning of words based on their co-occurrence probabilities. this method, we take the corpus and iterate through it and get the co-occurrence of each word with other words in the corpus. We get a co-occurrence matrix through this. The words which occur next to each other get a value of 1, if they are one word apart then 1/2, if two words apart then 1/3 and so on.

Let's see how the matrix is created. Corpus:

It is a nice evening.

Good Evening!

Is it a nice evening?

| | it | is | a | nice | evening | good |

|---|

| it | 0 | | | | | |

|---|

| is | 1+1 | 0 | | | | |

|---|

| a | 1/2+1 | 1+1/2 | 0 | | | |

|---|

| nice | 1/3+1/2 | 1/2+1/3 | 1+1 | 0 | | |

|---|

| evening | 1/4+1/3 | 1/3+1/4 | 1/2+1/2 | 1+1 | 0 | |

|---|

| good | 0 | 0 | 0 | 0 | 1 | 0 |

|---|

The upper half of the matrix will be a reflection of the lower half. We can consider a window frame as well to calculate the co-occurrences by shifting the frame till the end of the corpus. This helps gather information about the context in which the word is used.

- Vectors for each word is assigned randomly.

- Take two pairs of vectors and see closeness in space.

- If they occur together more often or have a higher value in the co-occurrence matrix and are far apart in space then they are brought close to each other.

- If they are close to each other but are rarely or not frequently used together then they are moved further apart in space.

- Output: Vector space representation that approximates the information from the co-occurrence matrix.

You can refer to the library used in this approach: Gensim

Python from gensim.models import KeyedVectors from gensim.downloader import load glove_model = load('glove-wiki-gigaword-50') word_pairs = [('learn', 'learning'), ('india', 'indian'), ('fame', 'famous')] # Compute similarity for each pair of words for pair in word_pairs: similarity = glove_model.similarity(pair[0], pair[1]) print(f"Similarity between '{pair[0]}' and '{pair[1]}' using GloVe: {similarity:.3f}") Output:

Similarity between 'learn' and 'learning' using GloVe: 0.802

Similarity between 'india' and 'indian' using GloVe: 0.865

Similarity between 'fame' and 'famous' using GloVe: 0.589

3.2 Fasttext

Developed by Facebook, FastText extends Word2Vec by representing words as bags of character n-grams. This approach is particularly useful for handling out-of-vocabulary words and capturing morphological variations.

Python import gensim.downloader as api fasttext_model = api.load("fasttext-wiki-news-subwords-300") ## Load the pre-trained fastText model word_pairs = [('learn', 'learning'), ('india', 'indian'), ('fame', 'famous')] # Compute similarity for each pair of words for pair in word_pairs: similarity = fasttext_model.similarity(pair[0], pair[1]) print(f"Similarity between '{pair[0]}' and '{pair[1]}' using FastText: {similarity:.3f}") Output:

Similarity between 'learn' and 'learning' using Word2Vec: 0.642

Similarity between 'india' and 'indian' using Word2Vec: 0.708

Similarity between 'fame' and 'famous' using Word2Vec: 0.519

3.3 BERT (Bidirectional Encoder Representations from Transformers)

BERT is a transformer-based model that learns contextualized embeddings for words. It considers the entire context of a word by considering both left and right contexts, resulting in embeddings that capture rich contextual information.

Python from transformers import BertTokenizer, BertModel import torch # Load pre-trained BERT model and tokenizer model_name = 'bert-base-uncased' tokenizer = BertTokenizer.from_pretrained(model_name) model = BertModel.from_pretrained(model_name) word_pairs = [('learn', 'learning'), ('india', 'indian'), ('fame', 'famous')] # Compute similarity for each pair of words for pair in word_pairs: tokens = tokenizer(pair, return_tensors='pt') with torch.no_grad(): outputs = model(**tokens) # Extract embeddings for the [CLS] token cls_embedding = outputs.last_hidden_state[:, 0, :] similarity = torch.nn.functional.cosine_similarity(cls_embedding[0], cls_embedding[1], dim=0) print(f"Similarity between '{pair[0]}' and '{pair[1]}' using BERT: {similarity:.3f}") Output:

Similarity between 'learn' and 'learning' using BERT: 0.930

Similarity between 'india' and 'indian' using BERT: 0.957

Similarity between 'fame' and 'famous' using BERT: 0.956

Considerations for Deploying Word Embedding Models

- You need to use the exact same pipeline during deploying your model as were used to create the training data for the word embedding.

- You can replace "OOV" words with "UNK" or unknown and then handle them separately.

- Dimension mis-match: Ensure to use the same dimensions throughout during training and inference.

Advantages and Disadvantage of Word Embeddings

Advantages

- It is much faster to train than hand build models like WordNet

- Almost all modern NLP applications start with an embedding layer.

- It Stores an approximation of meaning.

Disadvantages

- It can be memory intensive.

- It is corpus dependent. Any underlying bias will affect your model.

- It cannot distinguish between homophones. E.g.: brake/break, cell/sell, etc.

Similar Reads

Deep Learning Tutorial Deep Learning tutorial covers the basics and more advanced topics, making it perfect for beginners and those with experience. Whether you're just starting or looking to expand your knowledge, this guide makes it easy to learn about the different technologies of Deep Learning.Deep Learning is a branc

5 min read

Introduction to Deep Learning

Basic Neural Network

Activation Functions

Artificial Neural Network

Classification

Regression

Hyperparameter tuning

Introduction to Convolution Neural Network

Introduction to Convolution Neural NetworkConvolutional Neural Network (CNN) is an advanced version of artificial neural networks (ANNs), primarily designed to extract features from grid-like matrix datasets. This is particularly useful for visual datasets such as images or videos, where data patterns play a crucial role. CNNs are widely us

8 min read

Digital Image Processing BasicsDigital Image Processing means processing digital image by means of a digital computer. We can also say that it is a use of computer algorithms, in order to get enhanced image either to extract some useful information. Digital image processing is the use of algorithms and mathematical models to proc

7 min read

Difference between Image Processing and Computer VisionImage processing and Computer Vision both are very exciting field of Computer Science. Computer Vision: In Computer Vision, computers or machines are made to gain high-level understanding from the input digital images or videos with the purpose of automating tasks that the human visual system can do

2 min read

CNN | Introduction to Pooling LayerPooling layer is used in CNNs to reduce the spatial dimensions (width and height) of the input feature maps while retaining the most important information. It involves sliding a two-dimensional filter over each channel of a feature map and summarizing the features within the region covered by the fi

5 min read

CIFAR-10 Image Classification in TensorFlowPrerequisites:Image ClassificationConvolution Neural Networks including basic pooling, convolution layers with normalization in neural networks, and dropout.Data Augmentation.Neural Networks.Numpy arrays.In this article, we are going to discuss how to classify images using TensorFlow. Image Classifi

8 min read

Implementation of a CNN based Image Classifier using PyTorchIntroduction: Introduced in the 1980s by Yann LeCun, Convolution Neural Networks(also called CNNs or ConvNets) have come a long way. From being employed for simple digit classification tasks, CNN-based architectures are being used very profoundly over much Deep Learning and Computer Vision-related t

9 min read

Convolutional Neural Network (CNN) ArchitecturesConvolutional Neural Network(CNN) is a neural network architecture in Deep Learning, used to recognize the pattern from structured arrays. However, over many years, CNN architectures have evolved. Many variants of the fundamental CNN Architecture This been developed, leading to amazing advances in t

11 min read

Object Detection vs Object Recognition vs Image SegmentationObject Recognition: Object recognition is the technique of identifying the object present in images and videos. It is one of the most important applications of machine learning and deep learning. The goal of this field is to teach machines to understand (recognize) the content of an image just like

5 min read

YOLO v2 - Object DetectionIn terms of speed, YOLO is one of the best models in object recognition, able to recognize objects and process frames at the rate up to 150 FPS for small networks. However, In terms of accuracy mAP, YOLO was not the state of the art model but has fairly good Mean average Precision (mAP) of 63% when

7 min read

Recurrent Neural Network

Natural Language Processing (NLP) TutorialNatural Language Processing (NLP) is the branch of Artificial Intelligence (AI) that gives the ability to machine understand and process human languages. Human languages can be in the form of text or audio format.Applications of NLPThe applications of Natural Language Processing are as follows:Voice

5 min read

NLTK - NLPNatural Language Toolkit (NLTK) is one of the largest Python libraries for performing various Natural Language Processing tasks. From rudimentary tasks such as text pre-processing to tasks like vectorized representation of text - NLTK's API has covered everything. In this article, we will accustom o

5 min read

Word Embeddings in NLPWord Embeddings are numeric representations of words in a lower-dimensional space, that capture semantic and syntactic information. They play a important role in Natural Language Processing (NLP) tasks. Here, we'll discuss some traditional and neural approaches used to implement Word Embeddings, suc

14 min read

Introduction to Recurrent Neural NetworksRecurrent Neural Networks (RNNs) differ from regular neural networks in how they process information. While standard neural networks pass information in one direction i.e from input to output, RNNs feed information back into the network at each step.Imagine reading a sentence and you try to predict

10 min read

Recurrent Neural Networks ExplanationToday, different Machine Learning techniques are used to handle different types of data. One of the most difficult types of data to handle and the forecast is sequential data. Sequential data is different from other types of data in the sense that while all the features of a typical dataset can be a

8 min read

Sentiment Analysis with an Recurrent Neural Networks (RNN)Recurrent Neural Networks (RNNs) are used in sequence tasks such as sentiment analysis due to their ability to capture context from sequential data. In this article we will be apply RNNs to analyze the sentiment of customer reviews from Swiggy food delivery platform. The goal is to classify reviews

5 min read

Short term MemoryIn the wider community of neurologists and those who are researching the brain, It is agreed that two temporarily distinct processes contribute to the acquisition and expression of brain functions. These variations can result in long-lasting alterations in neuron operations, for instance through act

5 min read

What is LSTM - Long Short Term Memory?Long Short-Term Memory (LSTM) is an enhanced version of the Recurrent Neural Network (RNN) designed by Hochreiter and Schmidhuber. LSTMs can capture long-term dependencies in sequential data making them ideal for tasks like language translation, speech recognition and time series forecasting. Unlike

5 min read

Long Short Term Memory Networks ExplanationPrerequisites: Recurrent Neural Networks To solve the problem of Vanishing and Exploding Gradients in a Deep Recurrent Neural Network, many variations were developed. One of the most famous of them is the Long Short Term Memory Network(LSTM). In concept, an LSTM recurrent unit tries to "remember" al

7 min read

LSTM - Derivation of Back propagation through timeLong Short-Term Memory (LSTM) are a type of neural network designed to handle long-term dependencies by handling the vanishing gradient problem. One of the fundamental techniques used to train LSTMs is Backpropagation Through Time (BPTT) where we have sequential data. In this article we see how BPTT

4 min read

Text Generation using Recurrent Long Short Term Memory NetworkLSTMs are a type of neural network that are well-suited for tasks involving sequential data such as text generation. They are particularly useful because they can remember long-term dependencies in the data which is crucial when dealing with text that often has context that spans over multiple words

4 min read