Hadoop - Mapper In MapReduce

Last Updated : 17 Apr, 2023

Map-Reduce is a programming model that is divided into two main phases: the Map phase and the Reduce phase. It is designed to process data in parallel, with the data distributed across multiple machines (nodes). A Hadoop Java program consists of a Mapper class and a Reducer class along with a driver class. The Hadoop Mapper is a function or task that processes all input records from a file and generates output that serves as input for the Reducer. It produces this output by emitting new key-value pairs. The input data must first be converted into key-value pairs, because the Mapper cannot process raw input records directly. While processing the input records as key-value pairs, the Mapper also generates small blocks of intermediate data. In this article, we discuss the processes that occur in the Mapper, their key features, and how key-value pairs are generated in the Mapper.

Let's understand the Mapper in Map-Reduce:

The Mapper is a simple user-defined program that performs operations on input-splits according to its design. Mapper is a base class that the developer extends in their own code according to the organization's requirements. The input and output types must be specified as type arguments of the Mapper class, and the developer sets them to suit the job.

For Example:

class MyMapper extends Mapper<KEYIN, VALUEIN, KEYOUT, VALUEOUT>
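The role of the four type parameters can be illustrated with a small dependency-free model. This is only a sketch: it does not extend Hadoop's Mapper class, and the class name `WordCountMapModel` and its word-count logic are assumptions chosen for illustration. Here KEYIN is the byte offset of a line, VALUEIN is the line itself, KEYOUT is a word, and VALUEOUT is the count 1.

```java
import java.util.AbstractMap.SimpleEntry;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Hypothetical, dependency-free model of a word-count map function.
// It imitates the shape of a Mapper but does NOT use Hadoop's classes.
class WordCountMapModel {

    // KEYIN = long (byte offset of the line), VALUEIN = String (the line),
    // KEYOUT = String (a word), VALUEOUT = Integer (always 1).
    static List<Map.Entry<String, Integer>> map(long offset, String line) {
        List<Map.Entry<String, Integer>> out = new ArrayList<>();
        // Split the line on whitespace and emit one (word, 1) pair per word.
        for (String word : line.trim().split("\\s+")) {
            if (!word.isEmpty()) {
                out.add(new SimpleEntry<>(word, 1));
            }
        }
        return out;
    }

    public static void main(String[] args) {
        // Prints one intermediate pair per word: hadoop 1, mapper 1, hadoop 1.
        for (Map.Entry<String, Integer> pair : map(0L, "hadoop mapper hadoop")) {
            System.out.println(pair.getKey() + "\t" + pair.getValue());
        }
    }
}
```

In a real job, the same logic would live in the `map` method of a class that extends Mapper, and the pairs would be emitted through the framework rather than returned as a list.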

The Mapper is the first piece of code that interacts with the input dataset. Suppose the dataset we are analyzing has 100 data blocks. In that case, there will be 100 Mapper tasks (by default, one per block) that run in parallel on the machines (nodes), each producing its own output. This is known as intermediate output and is stored on local disk, not on HDFS. The output of the Mapper acts as input for the Reducer, which performs sorting and aggregation on the data and produces the final output.

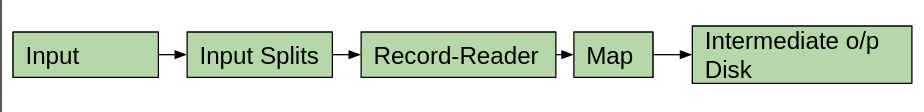

The Mapper mainly consists of 5 components: Input, Input Splits, Record Reader, Map, and Intermediate output disk. The Map task is completed with the contribution of all these components.

- Input: The input is the records or datasets used for analysis. This input data is specified with the help of InputFormat, which helps identify the location of the input data stored in HDFS (Hadoop Distributed File System).

- Input-Splits: These are responsible for converting the physical input data into a logical form that the Hadoop Mapper can handle easily. Input-splits are generated with the help of InputFormat. A large dataset is divided into many input-splits, and their number depends on the size of the input dataset. A separate Mapper is assigned to each input-split. Input-splits only reference the input data; they are not the actual data. Data blocks are not the only factor that decides the number of input-splits in a Map-Reduce job: the maximum size of an input-split can be set manually through the mapred.max.split.size property in the job configuration. Together, the input-splits cover all of the data blocks of the input. The size of an input-split is measured in bytes. Each input-split records the storage locations of its data as hostname strings. Map-Reduce places each map task as close to the location of its split as possible. Larger input-splits are executed first so that the job runtime is minimized.

- Record-Reader: The Record-Reader processes the data referenced by an input-split and generates its own output as key-value pairs until the end of the input is reached. With the default TextInputFormat, the Record-Reader assigns each line in the file its byte offset as the key and uses the contents of the line as the value. This conversion is needed because the Mapper can only handle key-value pairs.

- Map: The key-value pairs obtained from the Record-Reader are then fed to the Map, which generates a set of intermediate key-value pairs.

- Intermediate output disk: Finally, the intermediate key-value pairs are stored on the local disk as intermediate output. There is no need to store this data on HDFS because it is only intermediate output. Writing it to HDFS would cost more because of HDFS replication, and it would also increase the execution time. If the executing job were terminated, cleaning up intermediate output left on HDFS would also be difficult. The intermediate output is therefore stored on local disk and is cleaned up once the job completes its execution. Before it reaches the local disk, the Mapper output is first collected in an in-memory buffer whose default size is 100 MB, which can be configured with the io.sort.mb property. The Mapper output is written to HDFS only when the job is a map-only job. In that case there is no Reducer task, so the Mapper output is the final output and can be written to HDFS. The number of Reducer tasks can be set to zero manually with job.setNumReduceTasks(0). Otherwise, the Mapper output is of no use to the end user, as it is a temporary output useful only to the Reducer.
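The Record-Reader step described above can be modeled with a short sketch that turns raw text into (byte offset, line) pairs. This is a simplified illustration, not Hadoop's actual record reader: the class name `LineOffsetReaderModel` is hypothetical, and the code assumes UTF-8 text with `\n` line endings and ignores the handling of lines that cross split boundaries.

```java
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical, simplified model of line-oriented record reading.
// Assumes UTF-8 text and '\n' line endings; not Hadoop's real implementation.
class LineOffsetReaderModel {

    // Returns (byte offset of the line start -> line text), in file order.
    static Map<Long, String> readRecords(String content) {
        Map<Long, String> records = new LinkedHashMap<>();
        long offset = 0; // byte offset of the current line
        int start = 0;   // character index of the current line
        while (start < content.length()) {
            int end = content.indexOf('\n', start);
            if (end < 0) {
                end = content.length(); // last line without a trailing newline
            }
            String line = content.substring(start, end);
            records.put(offset, line);
            // Advance by the line's byte length plus one byte for the newline.
            offset += line.getBytes(StandardCharsets.UTF_8).length + 1;
            start = end + 1;
        }
        return records;
    }

    public static void main(String[] args) {
        // Prints "0<TAB>Hello World" and then "12<TAB>How are you".
        readRecords("Hello World\nHow are you\n")
                .forEach((key, value) -> System.out.println(key + "\t" + value));
    }
}
```

Each pair produced this way is what the Map step receives as its input key and value.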

How to calculate the number of Mappers In Hadoop:

The number of input-splits, which by default corresponds to the number of blocks in the input file, defines the number of map tasks in the Hadoop Map phase. It can be calculated with the formula below.

Number of Mappers = (total data size) / (input split size)

For example, for a file of size 10 TB (data size) where each data block is 128 MB (input split size), the number of Mappers will be 81920, since 10 TB = 10 × 1024 × 1024 MB = 10485760 MB and 10485760 / 128 = 81920.
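The calculation can be checked with a short sketch. The class and method names here are hypothetical. The split-size rule shown, max(minSize, min(maxSize, blockSize)), mirrors the rule used by Hadoop's FileInputFormat, but the sketch treats the input as a single file and ignores the finer details of Hadoop's real split logic, so it should be read as an approximation.

```java
// Hypothetical helper for estimating the number of map tasks for one file.
// A simplified model; real jobs compute splits per file with extra rules.
class MapperCountModel {

    // Split size is the block size, clamped between a minimum and a maximum.
    static long computeSplitSize(long blockSize, long minSize, long maxSize) {
        return Math.max(minSize, Math.min(maxSize, blockSize));
    }

    // Ceiling division: a partial final split still needs its own Mapper.
    static long numberOfSplits(long totalBytes, long splitSize) {
        return (totalBytes + splitSize - 1) / splitSize;
    }

    public static void main(String[] args) {
        long mb = 1024L * 1024L;
        long tb = mb * 1024L * 1024L;
        // With no effective minimum or maximum, the split size equals the block size.
        long splitSize = computeSplitSize(128 * mb, 1L, Long.MAX_VALUE);
        System.out.println(numberOfSplits(10 * tb, splitSize)); // prints 81920
    }
}
```

Lowering the maximum split size below the block size (for example to 64 MB) doubles the number of splits in this model, which is why data blocks are not the only factor that decides the number of Mappers.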